S3 bucket object versioning on Eumetsat Elasticity

S3 bucket versioning allows you to keep different versions of a file stored on object storage. Here are some typical use cases:

data recovery and backup

accidental deletion protection

collaboration and document management

application testing and rollbacks

change tracking for large datasets

file synchronization and archiving

In this article, you will learn how to

set up S3 bucket object versioning on Eumetsat Elasticity OpenStack

download different versions of files and

set up automatic removal of previous versions.

Prerequisites

No. 1 Account

You need a Eumetsat Elasticity hosting account with access to the Horizon interface: https://horizon.cloudferro.com/auth/login/?next=/.

No. 2 AWS CLI installed on your local computer or virtual machine

AWS CLI is free and open source software which can manage different clouds, not only those hosted by Amazon Web Services. In this article, you will use it to control your resources hosted on Eumetsat Elasticity cloud.

This article was written for Ubuntu 22.04. The commands may work on other operating systems, but might require adjusting.

Here is how to install AWS CLI on Ubuntu 22.04:

sudo apt install awscli

No. 3 Generated EC2 credentials

To authenticate to Eumetsat Elasticity cloud when using AWS CLI, you need to use EC2 credentials. If you don’t have them yet, check How to generate and manage EC2 credentials on Eumetsat Elasticity.

No. 4 Bucket naming rules

Over the course of this article, you will create several buckets. Make sure that you know the rules regarding what characters are allowed in bucket names. See section Creating a new object storage container of How to use Object Storage on Eumetsat Elasticity to learn more.

No. 5 Terminology: container vs. bucket

In this article, both “container” and “bucket” represent the same category of resources hosted on Eumetsat Elasticity cloud. The former term is more often used by the Horizon dashboard and the latter term is more often used by AWS CLI.

What We Are Going To Cover

Configuring and testing AWS CLI

Configure AWS CLI

Verify that AWS CLI is working

Assigning bucket names to shell variables

Making sure that bucket names are unique

Creating a bucket without versioning

Enabling versioning on a bucket

Uploading file

S3 paths

Uploading another version of a file

Listing available versions of a file

Example 1: One file, two versions

Example 2: Multiple files, multiple versions

Downloading a chosen version of a file

Deleting objects on version-enabled buckets

Setting up a deletion marker

Removing deletion marker

Permanently removing a file from version-enabled bucket

Using lifecycle policy to configure automatic deletion of previous versions of files

Preparing the testing environment

Setting up automatic removal of previous versions

Deleting lifecycle policy

Suspending versioning

Bucket on which versioning has never been enabled

Suspending of versioning

Configuring and testing AWS CLI

The following steps show how to configure AWS CLI for the first time. If it has been configured before, you might need to adjust the configuration to match the needs of this article.

Step 1: Configure AWS CLI

To configure AWS CLI, create a folder called .aws in your home directory:

mkdir ~/.aws

In it, create a text file called credentials:

touch ~/.aws/credentials

Navigate to .aws folder:

cd ~/.aws

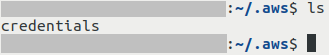

This is how listing of the contents of your .aws folder could now look like:

Open file credentials in plain text editor of your choice (like nano or vim). If you are using nano, this is the command:

nano credentials

Enter the following:

[default]

aws_access_key_id = <<YOUR_ACCESS_KEY>>

aws_secret_access_key = <<YOUR_SECRET_KEY>>

Replace <<YOUR_ACCESS_KEY>> and <<YOUR_SECRET_KEY>> with your access and secret key, respectively.

Save the file and exit the text editor.

The commands we provide in this article will have the appropriate endpoint already included, via the --endpoint-url parameter, and all you need to do is to select the command for the cloud that you are using.
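Since every command in this article repeats the same --endpoint-url value, one option is to keep it in a shell variable and wrap the call in a small function. The sketch below is only a convenience, not part of AWS CLI: the names endpoint_url and s3api_cmd are hypothetical, and the rest of the article keeps using the full commands.

```shell
# Assumption: the variable name endpoint_url and the function name s3api_cmd
# are illustrative choices, not something AWS CLI provides.
endpoint_url="https://s3.waw3-1.cloudferro.com"

# Run any "aws s3api" subcommand against the endpoint stored in $endpoint_url.
# --endpoint-url is a global option, so it is placed right after "aws".
s3api_cmd() {
    aws --endpoint-url "$endpoint_url" s3api "$@"
}

# Usage, for example to list your buckets:
#   s3api_cmd list-buckets
```

Like other shell definitions, the function is lost when the terminal session ends and has to be defined again in a new session.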

Step 2: Verify that AWS CLI is working

Execute command list-buckets to list buckets:

aws s3api list-buckets \

--endpoint-url https://s3.waw3-1.cloudferro.com

The output should be in JSON format. If you have a bucket named bucket1 and another bucket named bucket2, this is what it could look like:

{

"Buckets": [

{

"Name": "bucket1",

"CreationDate": "2023-11-14T08:55:38.526Z"

},

{

"Name": "bucket2",

"CreationDate": "2024-01-30T10:11:44.157Z"

}

],

"Owner": {

"DisplayName": "my-project",

"ID": "1234567890abcdefghijklmnopqrstuv"

}

}

Here:

Value of key Buckets should contain a list of your buckets (names and creation dates)

Value of key Owner should contain name and ID of your project

Note

In this article, colors have been added to make JSON more legible. AWS CLI typically does not output colored text.

Assigning bucket names to shell variables

To differentiate between different buckets used in various examples of this article, we will use the following shell variables:

- bucket_name1

used in majority of examples in this article

- bucket_name2

for uploading file to non-root directory

- bucket_name3

for using lifecycle policy

- bucket_name4

bucket on which versioning has never been enabled, used as introduction to suspending of versioning

- bucket_name5

example of suspending of versioning

Choose names for these five buckets. Make sure to follow naming rules from Prerequisite No. 4.

Assign the names to these variables, for example:

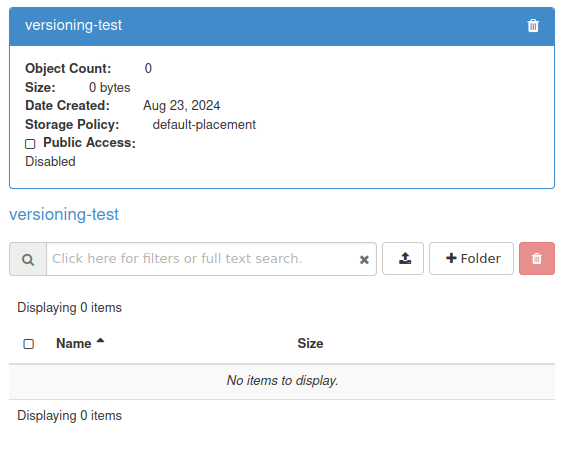

bucket_name1="versioning-test"

bucket_name2="examplebucket"

bucket_name3="testnoncurrent"

bucket_name4="no-versioning"

bucket_name5="anotherbucket"

Important

The shell variables will be valid as long as your terminal session is active. When you start a new terminal session, you will need to reassign these variables. Therefore, you should write them down somewhere so that you don’t lose them.

To use content of a shell variable as an argument of a command, you need to prefix the name of the variable with $. An example command to create a bucket whose name is stored in variable $bucket_name1 (where <<SOME_URL>> is the URL of the endpoint):

aws s3api create-bucket \

--endpoint-url <<SOME_URL>> \

--bucket $bucket_name1

Making sure that bucket names are unique

If single tenancy is enabled on the cloud you are using, the name of your bucket needs to be unique for the entire cloud. Buckets called versioning-test, examplebucket etc. may well already exist. If that is the case, the output from create-bucket command will look like this:

argument of type 'NoneType' is not iterable

To create unique names, you can, for example, add a unique string to the base bucket name. Consider adding

your initials,

date and time

a random number

a UUID (random string of characters and digits)

or even a combination of these methods.

As a practical example, on Ubuntu 22.04, use command called uuidgen to generate a UUID:

uuidgen

The output should contain a UUID:

889fa8de-9623-4735-99c7-9f1567e2a965

Then you can copy the generated UUID and add it to the bucket name, for instance:

bucket_name1="versioning-test-889fa8de-9623-4735-99c7-9f1567e2a965"

If needed, make sure to repeat this process for each variable.

The best course of action is to store bucket names somewhere safe as there are two scenarios possible:

- Reusing the existing buckets

Maybe the terminal session was restarted at some point but you want to continue working through the article. Or, you have previously used the article to create several buckets and now you want to continue using them.

- Avoid using the existing buckets

Go through the article without previous baggage, using a “clean slate” approach. This is what you would normally do when using the article for the very first time.
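The two ideas above, unique names and keeping them somewhere safe, can be combined in one step. The following is a minimal sketch under stated assumptions: the suffix is built from the current date and time plus the process ID of the shell (standing in for the random component), and the file name bucket-names.env is a hypothetical choice.

```shell
# Assumptions: the base names match the examples in this article; the suffix
# format and the file name bucket-names.env are illustrative.
suffix="$(date +%Y%m%d%H%M%S)-$$"   # date and time plus the shell's process ID

bucket_name1="versioning-test-$suffix"
bucket_name2="examplebucket-$suffix"
bucket_name3="testnoncurrent-$suffix"
bucket_name4="no-versioning-$suffix"
bucket_name5="anotherbucket-$suffix"

# Save the assignments so that a later terminal session can reuse them.
cat > bucket-names.env <<EOF
bucket_name1="$bucket_name1"
bucket_name2="$bucket_name2"
bucket_name3="$bucket_name3"
bucket_name4="$bucket_name4"
bucket_name5="$bucket_name5"
EOF

# In a new terminal session, restore the variables with:
#   . ./bucket-names.env
```

To start with a clean slate instead, run the snippet again; it generates a new suffix and overwrites the saved file.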

Creating a bucket without versioning

Command create-bucket will create a bucket under your chosen name (variable $bucket_name1).

aws s3api create-bucket \

--endpoint-url https://s3.waw3-1.cloudferro.com \

--bucket $bucket_name1

The output of this command should be empty if everything went well.

Enabling versioning on a bucket

To enable versioning on the bucket $bucket_name1, use command put-bucket-versioning:

aws s3api put-bucket-versioning \

--endpoint-url https://s3.waw3-1.cloudferro.com \

--bucket $bucket_name1 \

--versioning-configuration MFADelete=Disabled,Status=Enabled

Note

On Amazon Web Services, the presence of parameter MFADelete increases security by requiring two security factors when changing versioning status or removing file version. Here we disable it for simplicity.

The output of this command should also be empty.
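Because both commands print nothing on success, it can help to ask the bucket for its versioning state directly. The sketch below wraps the get-bucket-versioning command in a hypothetical helper called versioning_status; the --query and --output options only trim the response down to the Status field.

```shell
# Assumption: the function name versioning_status is illustrative.
# Prints the versioning status of the bucket given as $1, for example Enabled
# or Suspended. In text mode, AWS CLI typically prints None when the response
# contains no Status field.
versioning_status() {
    aws s3api get-bucket-versioning \
        --endpoint-url https://s3.waw3-1.cloudferro.com \
        --bucket "$1" \
        --query Status \
        --output text
}

# Usage:
#   versioning_status "$bucket_name1"
```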

Uploading file

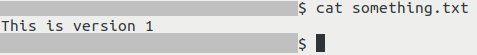

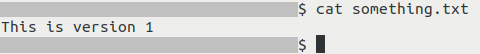

Let’s say that we upload a file to the root directory of our container. Let the name of the file be something.txt and let it have the following content: This is version 1.

We are in the folder which contains that file and we execute command put-object to upload the file to our bucket:

aws s3api put-object \

--endpoint-url https://s3.waw3-1.cloudferro.com \

--bucket $bucket_name1 \

--body something.txt \

--key something.txt

In this command, the values of parameters are:

- --body

the name of the file within our local file system

- --key

the location (like file name) under which the file is to be saved on the container.

We get output like this:

{

"ETag": "\"a4d8980efbd9b71f416595a3d5588b32\"",

"VersionId": "whrj2pDFrrFq0WLdH0zGzprfkebQykf"

}

This upload created the first version of the file. The ID of this version is whrj2pDFrrFq0WLdH0zGzprfkebQykf (value of key VersionId). You can write it down, as you will need it later to refer to this version of the file.

The output also provides an ETag key, which is a hash of the object you uploaded.

S3 paths

The parameter --key from put-object command may also be used in other commands to reference an already uploaded file in the bucket.

If used without slashes, as in the example above, the file is in the root directory.

If your file is outside of the root directory, the value of parameter --key needs to include the directory in which it is located. When providing this path, separate directories and files with forward slashes (/). Contrary to the Linux file system, do not add a slash to the beginning of the path. If your bucket contains a directory called place1, which contains another directory, place2, which, in turn, contains a file called myfile.txt, this is how to specify its path for the --key parameter:

--key place1/place2/myfile.txt

To practice, you can create a new bucket whose name is stored in variable $bucket_name2:

aws s3api create-bucket \

--endpoint-url https://s3.waw3-1.cloudferro.com \

--bucket $bucket_name2

After that, you can create a file named myfile.txt and upload it to above mentioned directory of that bucket:

aws s3api put-object \

--endpoint-url https://s3.waw3-1.cloudferro.com \

--bucket $bucket_name2 \

--body myfile.txt \

--key place1/place2/myfile.txt
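To check where the file ended up, you can list only the keys that start with a given path. This is a sketch using the --prefix option of list-objects; the helper name list_keys_under is hypothetical, and --query with --output text merely reduces the JSON response to the key names.

```shell
# Assumption: the function name list_keys_under is illustrative.
# $1 = bucket name, $2 = key prefix such as place1/place2/
list_keys_under() {
    aws s3api list-objects \
        --endpoint-url https://s3.waw3-1.cloudferro.com \
        --bucket "$1" \
        --prefix "$2" \
        --query 'Contents[].Key' \
        --output text
}

# Usage:
#   list_keys_under "$bucket_name2" place1/place2/
```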

Uploading another version of a file

Let us now return to $bucket_name1.

We already have file something.txt in the root directory of the container, in the cloud.

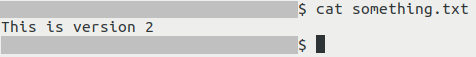

Let’s use your local computer to modify it so that it contains string This is version 2.

Then, we use the same command, put-object, to upload the modified file to the same location on the bucket:

aws s3api put-object \

--endpoint-url https://s3.waw3-1.cloudferro.com \

--bucket $bucket_name1 \

--key something.txt \

--body something.txt

The output is similar, but this time it contains a different VersionId: t22ZzEq6kt5ILKFfLZgoeSzW.I9HVtN.

{

"ETag": "\"ded190b85763d32ce9c09a8aef51f44c\"",

"VersionId": "t22ZzEq6kt5ILKFfLZgoeSzW.I9HVtN"

}

To list all files in that bucket, execute command list-objects:

aws s3api list-objects \

--bucket $bucket_name1 \

--endpoint-url https://s3.waw3-1.cloudferro.com

The output will be similar to this:

{

"Contents": [

{

"Key": "something.txt",

"LastModified": "2024-08-23T10:32:30.259Z",

"ETag": "\"ded190b85763d32ce9c09a8aef51f44c\"",

"Size": 18,

"StorageClass": "STANDARD",

"Owner": {

"DisplayName": "this-project",

"ID": "1234567890abcdefghijklmnopqrstuv"

}

}

],

"RequestCharged": null

}

Here is what the parameters mean:

- Key

name and/or path of the file.

- LastModified

timestamp of when the file was last modified.

- ETag

the hash of the file.

- Size

size of the file in bytes.

- StorageClass

information regarding the storage class (STANDARD in this example).

- Owner

information about your project - name (parameter DisplayName) and ID.

In the example above, the bucket still contains only one file - something.txt. This upload overwrote it with a new version, but the previous version is still present.

Listing available versions of a file

Example 1: One file, two versions

To list the available versions of files in this bucket, use list-object-versions:

aws s3api list-object-versions \

--endpoint-url https://s3.waw3-1.cloudferro.com \

--bucket $bucket_name1

The output could look like this:

{

"Versions": [

{

"ETag": "\"ded190b85763d32ce9c09a8aef51f44c\"",

"Size": 18,

"StorageClass": "STANDARD",

"Key": "something.txt",

"VersionId": "t22ZzEq6kt5ILKFfLZgoeSzW.I9HVtN",

"IsLatest": true,

"LastModified": "2024-08-23T10:32:30.259Z",

"Owner": {

"DisplayName": "this-project",

"ID": "1234567890abcdefghijklmnopqrstuv"

}

},

{

"ETag": "\"a4d8980efbd9b71f416595a3d5588b32\"",

"Size": 18,

"StorageClass": "STANDARD",

"Key": "something.txt",

"VersionId": "whrj2pDFrrFq0WLdH0zGzprfkebQykf",

"IsLatest": false,

"LastModified": "2024-08-23T10:19:24.943Z",

"Owner": {

"DisplayName": "this-project",

"ID": "1234567890abcdefghijklmnopqrstuv"

}

}

],

"RequestCharged": null

}

It contains two versions we created previously. Each has its own ID, which is the value of parameter VersionId:

| Key | VersionId |
| --- | --- |
| something.txt | t22ZzEq6kt5ILKFfLZgoeSzW.I9HVtN |
| something.txt | whrj2pDFrrFq0WLdH0zGzprfkebQykf |

Both of them are tied to the same file called something.txt.
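When only the keys and version IDs are of interest, the JSON response can be reduced on the client side. The sketch below assumes a hypothetical helper named list_versions; it relies on the --query option (a JMESPath expression) and --output text, and should print one line per version containing the key, the version ID and the IsLatest flag.

```shell
# Assumption: the function name list_versions is illustrative.
# $1 = bucket name
list_versions() {
    aws s3api list-object-versions \
        --endpoint-url https://s3.waw3-1.cloudferro.com \
        --bucket "$1" \
        --query 'Versions[].[Key,VersionId,IsLatest]' \
        --output text
}

# Usage:
#   list_versions "$bucket_name1"
```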

Example 2: Multiple files, multiple versions

Let us now consider an alternative situation in which we have two files, and one of them has two versions.

The output of list-object-versions could then look like this:

{

"Versions": [

{

"ETag": "\"adda90afa69e725c2f551e0722014726\"",

"Size": 575,

"StorageClass": "STANDARD",

"Key": "something1.txt",

"VersionId": "kv7QRQsfHhEe-T6c9g-v3uIPoyX6FTs",

"IsLatest": true,

"LastModified": "2024-08-26T16:10:49.979Z",

"Owner": {

"DisplayName": "this-project",

"ID": "1234567890qwertyuiopasdfghjklzxc"

}

},

{

"ETag": "\"947073995b23baa9a565cf21bf56a2ba\"",

"Size": 6,

"StorageClass": "STANDARD",

"Key": "something2.txt",

"VersionId": "no1KrA3MbEtjIk1CnN5U.rTtFKFXSpj",

"IsLatest": true,

"LastModified": "2024-08-26T16:12:29.961Z",

"Owner": {

"DisplayName": "this-project",

"ID": "1234567890qwertyuiopasdfghjklzxc"

}

},

{

"ETag": "\"7d7b28bafa5222d9083fa4ea7e97cff6\"",

"Size": 106,

"StorageClass": "STANDARD",

"Key": "something2.txt",

"VersionId": "gRYReY1SpVI3rS-Qp0NYDPofoAfGfc7",

"IsLatest": false,

"LastModified": "2024-08-26T16:11:21.584Z",

"Owner": {

"DisplayName": "this-project",

"ID": "1234567890qwertyuiopasdfghjklzxc"

}

}

],

"RequestCharged": null

}

| Key | VersionId |
| --- | --- |
| something1.txt | kv7QRQsfHhEe-T6c9g-v3uIPoyX6FTs |
| something2.txt | no1KrA3MbEtjIk1CnN5U.rTtFKFXSpj |
| something2.txt | gRYReY1SpVI3rS-Qp0NYDPofoAfGfc7 |

File something1.txt has one version, while file something2.txt has two versions.

Downloading a chosen version of the file

Let us return to $bucket_name1.

To download the version of file something.txt stored on that bucket which has the VersionId of whrj2pDFrrFq0WLdH0zGzprfkebQykf, we execute the get-object command:

aws s3api get-object \

--endpoint-url https://s3.waw3-1.cloudferro.com \

--bucket $bucket_name1 \

--key something.txt \

--version-id whrj2pDFrrFq0WLdH0zGzprfkebQykf \

./something.txt

In this command:

- --key

is the name and/or location of the file on your bucket

- --version-id

is the ID of the chosen version of the file

- ./something.txt

is the name and/or location on your local file system to which you want to download the file. If there is already a file there, it will be overwritten.

The file should be downloaded and we should get output like this:

{

"AcceptRanges": "bytes",

"LastModified": "Fri, 23 Aug 2024 10:19:24 GMT",

"ContentLength": 18,

"ETag": "\"a4d8980efbd9b71f416595a3d5588b32\"",

"VersionId": "whrj2pDFrrFq0WLdH0zGzprfkebQykf",

"ContentType": "binary/octet-stream",

"Metadata": {}

}

The file should be in our current working directory.

Displaying its contents with the cat command reveals that it is indeed the first version of that file.
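Because get-object overwrites an existing local file, it can be convenient to download each version under its own name and only then inspect it. The following is a minimal sketch; the helper name download_version and the local file name something.v1.txt are hypothetical.

```shell
# Assumption: the function name download_version is illustrative.
# $1 = bucket name, $2 = key, $3 = version ID, $4 = local output file
download_version() {
    aws s3api get-object \
        --endpoint-url https://s3.waw3-1.cloudferro.com \
        --bucket "$1" \
        --key "$2" \
        --version-id "$3" \
        "$4"
}

# Usage: save the first version under a separate name, then display it.
#   download_version "$bucket_name1" something.txt whrj2pDFrrFq0WLdH0zGzprfkebQykf ./something.v1.txt
#   cat ./something.v1.txt
```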

Deleting objects on version-enabled buckets

AWS CLI includes command delete-object which is used to delete files stored on buckets. It behaves differently depending on the circumstances:

On regular buckets, it will simply delete the specified file.

On version-enabled buckets, there are two possibilities:

- Version to be deleted is not specified

The command will not delete the specified file but will, instead, place a deletion marker on it.

- The version to be deleted is specified

The specified version will be deleted permanently.

Here are the examples for version-enabled buckets.

Setting up a deletion marker

The command to delete files from buckets is delete-object.

Let us try to delete file named something.txt from bucket $bucket_name1 and let us NOT specify the version:

aws s3api delete-object \

--endpoint-url https://s3.waw3-1.cloudferro.com \

--bucket $bucket_name1 \

--key something.txt

This command should return output like this:

{

"DeleteMarker": true,

"VersionId": "A0hVZCX0z6yMrlmoYymeaGPT4nzInS2"

}

The marker we just placed causes the file to be invisible when listing files normally. VersionId is useful if you, say, want to remove that marker and restore the file.

To fully see the effect of delete-object command, we list objects again using list-objects:

aws s3api list-objects \

--endpoint-url https://s3.waw3-1.cloudferro.com \

--bucket $bucket_name1

This time, the output does not list any files - the file something.txt became invisible.

If there are no files to list, you might get the following output:

{

"RequestCharged": null

}

or your output might be empty.

If we list versions of files in our bucket using list-object-versions, for instance:

aws s3api list-object-versions \

--endpoint-url https://s3.waw3-1.cloudferro.com \

--bucket $bucket_name1

we will see that the previous versions are still there:

{

"Versions": [

{

"ETag": "\"ded190b85763d32ce9c09a8aef51f44c\"",

"Size": 18,

"StorageClass": "STANDARD",

"Key": "something.txt",

"VersionId": "t22ZzEq6kt5ILKFfLZgoeSzW.I9HVtN",

"IsLatest": false,

"LastModified": "2024-08-23T10:32:30.259Z",

"Owner": {

"DisplayName": "this-project",

"ID": "1234567890abcdefghijklmnopqrstuv"

}

},

{

"ETag": "\"a4d8980efbd9b71f416595a3d5588b32\"",

"Size": 18,

"StorageClass": "STANDARD",

"Key": "something.txt",

"VersionId": "whrj2pDFrrFq0WLdH0zGzprfkebQykf",

"IsLatest": false,

"LastModified": "2024-08-23T10:19:24.943Z",

"Owner": {

"DisplayName": "this-project",

"ID": "1234567890abcdefghijklmnopqrstuv"

}

}

],

"DeleteMarkers": [

{

"Owner": {

"DisplayName": "this-project",

"ID": "1234567890abcdefghijklmnopqrstuv"

},

"Key": "something.txt",

"VersionId": "A0hVZCX0z6yMrlmoYymeaGPT4nzInS2",

"IsLatest": true,

"LastModified": "2024-08-23T11:28:48.128Z"

}

],

"RequestCharged": null

}

Apart from the previously uploaded versions, a delete marker (key DeleteMarkers) with version ID of A0hVZCX0z6yMrlmoYymeaGPT4nzInS2 can also be found.

Note

If your bucket contains additional files, they too will be listed here.

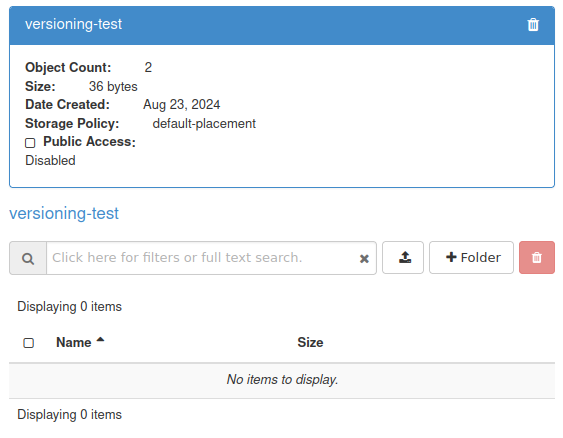

Within the Horizon dashboard, the file is also “invisible”:

Even though the file cannot be seen, the size of the bucket is still displayed correctly - 36 bytes. Each stored version of each file adds to the total size.

Removing the deletion marker

To restore the visibility of a file, delete its deletion marker by issuing command delete-object and specify the VersionID of the deletion marker:

aws s3api delete-object \

--endpoint-url https://s3.waw3-1.cloudferro.com \

--bucket $bucket_name1 \

--key something.txt \

--version-id A0hVZCX0z6yMrlmoYymeaGPT4nzInS2

In this command:

something.txt is the name of the file

A0hVZCX0z6yMrlmoYymeaGPT4nzInS2 is the VersionID of the deletion marker which was obtained while following the previous section of this article.

Warning

Make sure to enter the correct VersionID to prevent accidental deletion of important data!

We get the following output:

{

"DeleteMarker": true,

"VersionId": "A0hVZCX0z6yMrlmoYymeaGPT4nzInS2"

}

Once again, let us list object versions using command list-object-versions:

aws s3api list-object-versions \

--endpoint-url https://s3.waw3-1.cloudferro.com \

--bucket $bucket_name1

The delete marker no longer exists:

{

"Versions": [

{

"ETag": "\"ded190b85763d32ce9c09a8aef51f44c\"",

"Size": 18,

"StorageClass": "STANDARD",

"Key": "something.txt",

"VersionId": "t22ZzEq6kt5ILKFfLZgoeSzW.I9HVtN",

"IsLatest": true,

"LastModified": "2024-08-23T10:32:30.259Z",

"Owner": {

"DisplayName": "this-project",

"ID": "1234567890abcdefghijklmnopqrstuv"

}

},

{

"ETag": "\"a4d8980efbd9b71f416595a3d5588b32\"",

"Size": 18,

"StorageClass": "STANDARD",

"Key": "something.txt",

"VersionId": "whrj2pDFrrFq0WLdH0zGzprfkebQykf",

"IsLatest": false,

"LastModified": "2024-08-23T10:19:24.943Z",

"Owner": {

"DisplayName": "this-project",

"ID": "1234567890abcdefghijklmnopqrstuv"

}

}

],

"RequestCharged": null

}

And if we list files with list-objects:

aws s3api list-objects \

--bucket $bucket_name1 \

--endpoint-url https://s3.waw3-1.cloudferro.com

the output once again shows one file - something.txt:

{

"Contents": [

{

"Key": "something.txt",

"LastModified": "2024-08-23T10:32:30.259Z",

"ETag": "\"ded190b85763d32ce9c09a8aef51f44c\"",

"Size": 18,

"StorageClass": "STANDARD",

"Owner": {

"DisplayName": "this-project",

"ID": "1234567890abcdefghijklmnopqrstuv"

}

}

],

"RequestCharged": null

}

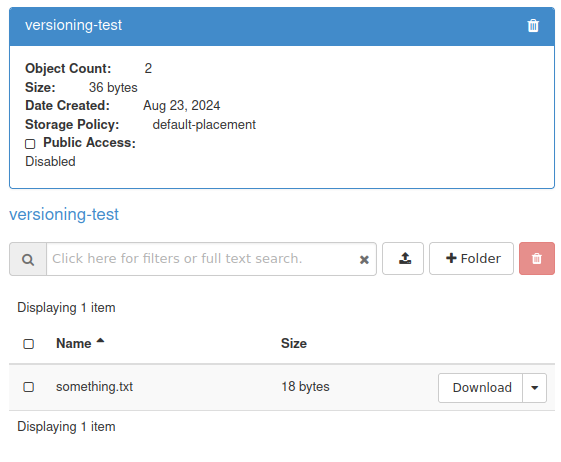

The file should now also be visible in Horizon again:

Note that in this screenshot, the visible file has a size of 18 bytes, whereas the total size of this bucket is 36 bytes. This is because the total size includes both stored versions of the file.
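If the VersionId of a deletion marker was not written down, it can be looked up again before removing the marker. The sketch below assumes a hypothetical helper named latest_delete_marker; it narrows the listing with --prefix and keeps only the markers that are currently the latest entry for their key. Since --prefix matches every key starting with the given string, the key is printed next to each version ID so that you can confirm it is the one you mean.

```shell
# Assumption: the function name latest_delete_marker is illustrative.
# $1 = bucket name, $2 = key (used as a prefix filter)
latest_delete_marker() {
    aws s3api list-object-versions \
        --endpoint-url https://s3.waw3-1.cloudferro.com \
        --bucket "$1" \
        --prefix "$2" \
        --query 'DeleteMarkers[?IsLatest==`true`].[Key,VersionId]' \
        --output text
}

# Usage:
#   latest_delete_marker "$bucket_name1" something.txt
```

The printed version ID can then be passed to delete-object with --version-id, as shown earlier in this section.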

Permanently removing files from version-enabled bucket

You can delete versions of a file stored in the bucket just like you can delete the previously mentioned delete marker.

The two versions of file something.txt, t22ZzEq6kt5ILKFfLZgoeSzW.I9HVtN and whrj2pDFrrFq0WLdH0zGzprfkebQykf still exist in bucket $bucket_name1.

To permanently delete the first of them, t22ZzEq6kt5ILKFfLZgoeSzW.I9HVtN (the most recently uploaded version), we use a delete-object command similar to the one used to remove the deletion marker. The difference is that here we specify the VersionID of the file version which we want to remove.

aws s3api delete-object \

--endpoint-url https://s3.waw3-1.cloudferro.com \

--bucket $bucket_name1 \

--key something.txt \

--version-id t22ZzEq6kt5ILKFfLZgoeSzW.I9HVtN

We should get output like this:

{

"VersionId": "t22ZzEq6kt5ILKFfLZgoeSzW.I9HVtN"

}

When we list versions of files stored on bucket with list-object-versions:

aws s3api list-object-versions \

--endpoint-url https://s3.waw3-1.cloudferro.com \

--bucket $bucket_name1

the output will show us only one version of one file:

{

"Versions": [

{

"ETag": "\"a4d8980efbd9b71f416595a3d5588b32\"",

"Size": 18,

"StorageClass": "STANDARD",

"Key": "something.txt",

"VersionId": "whrj2pDFrrFq0WLdH0zGzprfkebQykf",

"IsLatest": true,

"LastModified": "2024-08-23T10:19:24.943Z",

"Owner": {

"DisplayName": "this-project",

"ID": "1234567890abcdefghijklmnopqrstuv"

}

}

],

"RequestCharged": null

}

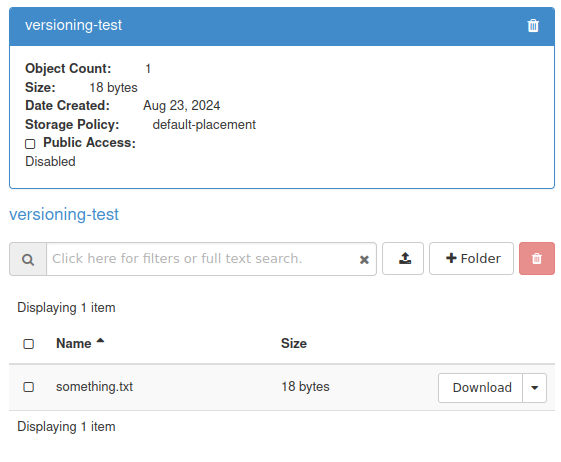

In the Horizon dashboard, the total size of the bucket was reduced to 18 bytes:

If we delete the last remaining version using command delete-object,

aws s3api delete-object \

--endpoint-url https://s3.waw3-1.cloudferro.com \

--bucket $bucket_name1 \

--key something.txt \

--version-id whrj2pDFrrFq0WLdH0zGzprfkebQykf

the last file from Horizon dashboard should disappear and the size of the bucket should be reduced to zero bytes:

If we now execute the list-object-versions command:

aws s3api list-object-versions \

--endpoint-url https://s3.waw3-1.cloudferro.com \

--bucket $bucket_name1

we will see that there are no files or versions there:

{

"RequestCharged": null

}

Using lifecycle policy to configure automatic deletion of previous versions of files

“Noncurrent version” is any version of a file which is not the latest. In this section, we will cover how to configure automatic deletion of these versions after a specified number of days.

For this purpose, we will use a feature called “lifecycle policy”.

This example covers configuring automatic removal of noncurrent versions of a file 1 day after a newer version of the same file has been uploaded.

Preparing the testing environment

For testing, create bucket whose name is stored in variable $bucket_name3 and enable versioning:

aws s3api create-bucket \

--endpoint-url https://s3.waw3-1.cloudferro.com \

--bucket $bucket_name3

aws s3api put-bucket-versioning \

--endpoint-url https://s3.waw3-1.cloudferro.com \

--bucket $bucket_name3 \

--versioning-configuration MFADelete=Disabled,Status=Enabled

For the sake of this article, let us suppose that we are in a folder which contains the following two files:

mycode.py

announcement.md

The actual content of these files is not important here.

We upload these files to $bucket_name3 using the standard put-object command:

aws s3api put-object \

--endpoint-url https://s3.waw3-1.cloudferro.com \

--bucket $bucket_name3 \

--body mycode.py \

--key mycode.py

aws s3api put-object \

--endpoint-url https://s3.waw3-1.cloudferro.com \

--bucket $bucket_name3 \

--body announcement.md \

--key announcement.md

To see these files after upload, execute list-object-versions on the bucket:

aws s3api list-object-versions \

--endpoint-url https://s3.waw3-1.cloudferro.com \

--bucket $bucket_name3

We get the following output:

{

"Versions": [

{

"ETag": "\"d185982da39fb33854a5b49c8e416e07\"",

"Size": 34,

"StorageClass": "STANDARD",

"Key": "announcement.md",

"VersionId": "r714CQ6MLAo4l300Fv9iBCqfNpESPpN",

"IsLatest": true,

"LastModified": "2024-10-04T14:51:26.015Z",

"Owner": {

"DisplayName": "this-project",

"ID": "1234567890abcdefghijklmnopqrstuv"

}

},

{

"ETag": "\"6cf02e36dd1dc8b58ea77ba4a94291f2\"",

"Size": 21,

"StorageClass": "STANDARD",

"Key": "mycode.py",

"VersionId": ".qBE6Dx91dxnU7aYOzmBMM1qRg3QwAx",

"IsLatest": true,

"LastModified": "2024-10-04T14:51:41.115Z",

"Owner": {

"DisplayName": "this-project",

"ID": "1234567890abcdefghijklmnopqrstuv"

}

}

],

"RequestCharged": null

}

To test automatic deleting of previous versions, we modify file named mycode.py on our local computer and upload it one more time using put-object:

aws s3api put-object \

--endpoint-url https://s3.waw3-1.cloudferro.com \

--bucket $bucket_name3 \

--body mycode.py \

--key mycode.py

Executing list-object-versions again:

aws s3api list-object-versions \

--endpoint-url https://s3.waw3-1.cloudferro.com \

--bucket $bucket_name3

confirms that one of the files has two versions while the other only has one version:

{

"Versions": [

{

"ETag": "\"d185982da39fb33854a5b49c8e416e07\"",

"Size": 34,

"StorageClass": "STANDARD",

"Key": "announcement.md",

"VersionId": "r714CQ6MLAo4l300Fv9iBCqfNpESPpN",

"IsLatest": true,

"LastModified": "2024-10-04T14:51:26.015Z",

"Owner": {

"DisplayName": "this-project",

"ID": "1234567890abcdefghijklmnopqrstuv"

}

},

{

"ETag": "\"3a474b21ab418d007ad677262dfed5b6\"",

"Size": 39,

"StorageClass": "STANDARD",

"Key": "mycode.py",

"VersionId": "tYJ6IazGryIWjv4iwSM1mLTW4-AnhMN",

"IsLatest": true,

"LastModified": "2024-10-04T14:55:07.223Z",

"Owner": {

"DisplayName": "this-project",

"ID": "1234567890abcdefghijklmnopqrstuv"

}

},

{

"ETag": "\"6cf02e36dd1dc8b58ea77ba4a94291f2\"",

"Size": 21,

"StorageClass": "STANDARD",

"Key": "mycode.py",

"VersionId": ".qBE6Dx91dxnU7aYOzmBMM1qRg3QwAx",

"IsLatest": false,

"LastModified": "2024-10-04T14:51:41.115Z",

"Owner": {

"DisplayName": "this-project",

"ID": "1234567890abcdefghijklmnopqrstuv"

}

}

],

"RequestCharged": null

}

Contrary to other versions of files stored here, the first version of file mycode.py has false under the key IsLatest. This shows that this is not the latest version of that file.

Setting up automatic removal of previous versions

The lifecycle policy is written in JSON. Create file named noncurrent-policy.json in your current working directory (doesn’t have to be the location of the file which contains your login credentials) and enter the following code into it:

{

"Rules": [

{

"ID": "NoncurrentVersionExpiration",

"Filter": {

"Prefix": ""

},

"Status": "Enabled",

"NoncurrentVersionExpiration": {

"NoncurrentDays": 1

}

}

]

}

Replace 1 with the number of days after which noncurrent versions are to be deleted.
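As an alternative to editing the file by hand, the policy can be generated from the shell, with the number of days taken from a variable. This is a sketch that writes the same policy as above; the variable name days is an assumption, and the command overwrites any existing noncurrent-policy.json in the current working directory.

```shell
# Assumption: the variable name days is illustrative.
days=1   # number of days after which noncurrent versions are removed

# Write the lifecycle policy; $days is expanded inside the here-document.
cat > noncurrent-policy.json <<EOF
{
    "Rules": [
        {
            "ID": "NoncurrentVersionExpiration",
            "Filter": {
                "Prefix": ""
            },
            "Status": "Enabled",
            "NoncurrentVersionExpiration": {
                "NoncurrentDays": $days
            }
        }
    ]
}
EOF
```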

In this example, we will apply this policy to bucket $bucket_name3. The command is put-bucket-lifecycle-configuration:

aws s3api put-bucket-lifecycle-configuration \

--endpoint-url https://s3.waw3-1.cloudferro.com \

--bucket $bucket_name3 \

--lifecycle-configuration file://noncurrent-policy.json

The output should be empty.

To verify that the policy was applied, execute the get-bucket-lifecycle-configuration command:

aws s3api get-bucket-lifecycle-configuration \

--endpoint-url https://s3.waw3-1.cloudferro.com \

--bucket $bucket_name3

In the output, you should see the policy which you applied:

{

"Rules": [

{

"ID": "NoncurrentVersionExpiration",

"Filter": {

"Prefix": ""

},

"Status": "Enabled",

"NoncurrentVersionExpiration": {

"NoncurrentDays": 1

}

}

]

}

Versions of files which are not the latest should now be removed after 1 day.

In this example, logging in after one day and executing list-object-versions again:

aws s3api list-object-versions \

--endpoint-url https://s3.waw3-1.cloudferro.com \

--bucket $bucket_name3

reveals that the version of file mycode.py which is not the latest was deleted:

{

"Versions": [

{

"ETag": "\"d185982da39fb33854a5b49c8e416e07\"",

"Size": 34,

"StorageClass": "STANDARD",

"Key": "announcement.md",

"VersionId": "r714CQ6MLAo4l300Fv9iBCqfNpESPpN",

"IsLatest": true,

"LastModified": "2024-10-04T14:51:26.015Z",

"Owner": {

"DisplayName": "this-project",

"ID": "1234567890abcdefghijklmnopqrstuv"

}

},

{

"ETag": "\"3a474b21ab418d007ad677262dfed5b6\"",

"Size": 39,

"StorageClass": "STANDARD",

"Key": "mycode.py",

"VersionId": "tYJ6IazGryIWjv4iwSM1mLTW4-AnhMN",

"IsLatest": true,

"LastModified": "2024-10-04T14:55:07.223Z",

"Owner": {

"DisplayName": "this-project",

"ID": "1234567890abcdefghijklmnopqrstuv"

}

}

],

"RequestCharged": null

}

Deleting lifecycle policy

Command delete-bucket-lifecycle deletes bucket lifecycle policy. This is how to do it on bucket $bucket_name3.

aws s3api delete-bucket-lifecycle \

--endpoint-url https://s3.waw3-1.cloudferro.com \

--bucket $bucket_name3

The output of this command should be empty.

To verify, we once again check the current lifecycle configuration:

aws s3api get-bucket-lifecycle-configuration \

--endpoint-url https://s3.waw3-1.cloudferro.com \

--bucket $bucket_name3

This command should return either an empty output or:

argument of type 'NoneType' is not iterable

The policy should now no longer apply.

Suspending versioning

If you no longer want to store multiple versions of files, you can suspend the versioning.

Bucket on which versioning has never been enabled

To better understand how it works, let us start with a bucket in which versioning has never been enabled in the first place.

On such a bucket, every file has exactly one version, and the VersionId of that version is always null.

If you upload another file under the same key, it will also get a VersionId of null and will replace the previously uploaded file.

Example

For this example, we will create bucket $bucket_name4 on which versioning has never been enabled.

aws s3api create-bucket \

--endpoint-url https://s3.waw3-1.cloudferro.com \

--bucket $bucket_name4

Buckets can, of course, contain files of various types. For the sake of this example, suppose that the bucket contains the following three files:

| File | Editor |
|---|---|
| document.odt | LibreOffice |
| screenshot1.png | GIMP, Krita etc. |
| script.sh | nano, vim etc. |

The actual content of these files is not important here. You can use the editors from this table to create the files and then upload them with put-object:

aws s3api put-object \

--endpoint-url https://s3.waw3-1.cloudferro.com \

--bucket $bucket_name4 \

--body document.odt \

--key document.odt

aws s3api put-object \

--endpoint-url https://s3.waw3-1.cloudferro.com \

--bucket $bucket_name4 \

--body screenshot1.png \

--key screenshot1.png

aws s3api put-object \

--endpoint-url https://s3.waw3-1.cloudferro.com \

--bucket $bucket_name4 \

--body script.sh \

--key script.sh

First, let’s try to execute the previously mentioned list-object-versions command on this bucket:

aws s3api list-object-versions \

--endpoint-url https://s3.waw3-1.cloudferro.com \

--bucket $bucket_name4

Example output:

{

"Versions": [

{

"ETag": "\"5064a9c6200fd7dae7c25f2ed01a6f8f\"",

"Size": 9639,

"StorageClass": "STANDARD",

"Key": "document.odt",

"VersionId": "null",

"IsLatest": true,

"LastModified": "2024-09-16T11:19:02.425Z",

"Owner": {

"DisplayName": "this-project",

"ID": "1234567890abcdefghijklmnopqrstuv"

}

},

{

"ETag": "\"e3fedcd58235e90e7a676a84cd6c7ee6\"",

"Size": 174203,

"StorageClass": "STANDARD",

"Key": "screenshot1.png",

"VersionId": "null",

"IsLatest": true,

"LastModified": "2024-09-16T11:17:17.085Z",

"Owner": {

"DisplayName": "this-project",

"ID": "1234567890abcdefghijklmnopqrstuv"

}

},

{

"ETag": "\"5600fdc5aa752cba9895d985a9cf709e\"",

"Size": 36,

"StorageClass": "STANDARD",

"Key": "script.sh",

"VersionId": "null",

"IsLatest": true,

"LastModified": "2024-09-16T11:17:47.206Z",

"Owner": {

"DisplayName": "this-project",

"ID": "1234567890abcdefghijklmnopqrstuv"

}

}

],

"RequestCharged": null

}

All of these files have only one version and it has null as its ID.

Let’s say that we locally modify one of these three files (here we are using script.sh) and upload the modified version under the same name and key.

For this purpose, from within the folder which contains our file, we execute the following put-object command:

aws s3api put-object \

--endpoint-url https://s3.waw3-1.cloudferro.com \

--bucket $bucket_name4 \

--body script.sh \

--key script.sh

For confirmation, we should get output containing the ETag:

{

"ETag": "\"b6b82cb2376934bcf6877705bae6ac58\""

}

If we list object versions again with list-object-versions:

aws s3api list-object-versions \

--endpoint-url https://s3.waw3-1.cloudferro.com \

--bucket $bucket_name4

we should get output like this:

{

"Versions": [

{

"ETag": "\"5064a9c6200fd7dae7c25f2ed01a6f8f\"",

"Size": 9639,

"StorageClass": "STANDARD",

"Key": "document.odt",

"VersionId": "null",

"IsLatest": true,

"LastModified": "2024-09-16T11:19:02.425Z",

"Owner": {

"DisplayName": "this-project",

"ID": "1234567890abcdefghijklmnopqrstuv"

}

},

{

"ETag": "\"e3fedcd58235e90e7a676a84cd6c7ee6\"",

"Size": 174203,

"StorageClass": "STANDARD",

"Key": "screenshot1.png",

"VersionId": "null",

"IsLatest": true,

"LastModified": "2024-09-16T11:17:17.085Z",

"Owner": {

"DisplayName": "this-project",

"ID": "1234567890abcdefghijklmnopqrstuv"

}

},

{

"ETag": "\"b6b82cb2376934bcf6877705bae6ac58\"",

"Size": 60,

"StorageClass": "STANDARD",

"Key": "script.sh",

"VersionId": "null",

"IsLatest": true,

"LastModified": "2024-10-02T10:16:11.589Z",

"Owner": {

"DisplayName": "this-project",

"ID": "1234567890abcdefghijklmnopqrstuv"

}

}

],

"RequestCharged": null

}

Once again, there are three files, each with exactly one version stored. The file script.sh was overwritten during our upload: its parameters Size, ETag and LastModified (timestamp of last modification) have changed.

Suspending of versioning

When you suspend the versioning, your bucket will start behaving similarly to a bucket on which versioning has never been enabled. All files uploaded from that moment on will have null as their VersionId.

Suppose that, after suspending versioning, you upload a file to the same key (name and location within the bucket) as a previously existing file. What happens next depends on whether the bucket already contains a version of that file with a VersionId of null:

If no version with a VersionId of null exists, the version you are uploading will become a new version of that file.

If a version with a VersionId of null does exist, the version you are uploading will overwrite it.

Either way, the newly uploaded version will have a VersionId of null.

This overwrite also happens if the version with a VersionId of null is the only remaining version of the file.

Suspending versioning does not, by itself, affect previously saved versions whose VersionId is not null. You can delete them manually if you want to.
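The rules above can be summarised as a small decision function. This is only an illustrative sketch: upload_effect is a hypothetical name, the function does not contact the object storage, and the "enabled" branch is an assumption based on the listings in this article, where every upload to a bucket with versioning enabled gets its own VersionId.

```shell
# Hypothetical helper summarising what an upload to an existing key does.
# $1 = versioning state of the bucket: enabled | suspended
# $2 = does a version with VersionId null already exist for the key: yes | no
upload_effect() {
  case "$1:$2" in
    enabled:yes|enabled:no)
      echo "new version with a unique VersionId is added" ;;
    suspended:no)
      echo "new version with VersionId null is added" ;;
    suspended:yes)
      echo "existing version with VersionId null is overwritten" ;;
    *)
      echo "unknown input" >&2
      return 1 ;;
  esac
}

# Example call:
# upload_effect suspended yes
```

In every case, versions whose VersionId is not null are left untouched.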

In order to illustrate suspending of versioning, we will create a new bucket $bucket_name5. First create this bucket:

aws s3api create-bucket \

--endpoint-url https://s3.waw3-1.cloudferro.com \

--bucket $bucket_name5

and then enable versioning on it:

aws s3api put-bucket-versioning \

--endpoint-url https://s3.waw3-1.cloudferro.com \

--bucket $bucket_name5 \

--versioning-configuration MFADelete=Disabled,Status=Enabled

Upload a few files to this bucket with put-object command. Make sure that at least one of them has multiple versions.

In this example, our bucket contains the following files:

| Key | VersionId |
|---|---|
| file1.txt | CTv9FT1Wp9pxDZdlZXx2cJ5C2juPNA6 |
| file1.txt | eaJNZLZTqtPAq9l09Nrm-CN-UAVtFHQ |
| file2.txt | HVRcuAOQ.gpqiU50mJkdAj4bAvgfCFN |

We can list all these versions using list-object-versions:

aws s3api list-object-versions \

--endpoint-url https://s3.waw3-1.cloudferro.com \

--bucket $bucket_name5

The output:

{

"Versions": [

{

"ETag": "\"1f5f1ebe10ac3457ca87427e1772d71f\"",

"Size": 10,

"StorageClass": "STANDARD",

"Key": "file1.txt",

"VersionId": "CTv9FT1Wp9pxDZdlZXx2cJ5C2juPNA6",

"IsLatest": true,

"LastModified": "2024-09-16T11:28:47.501Z",

"Owner": {

"DisplayName": "this-project",

"ID": "1234567890abcdefghijklmnopqrstuv"

}

},

{

"ETag": "\"174b29d6d688c2b34f6c1bb7361a8b7e\"",

"Size": 10,

"StorageClass": "STANDARD",

"Key": "file1.txt",

"VersionId": "eaJNZLZTqtPAq9l09Nrm-CN-UAVtFHQ",

"IsLatest": false,

"LastModified": "2024-09-16T11:28:10.006Z",

"Owner": {

"DisplayName": "this-project",

"ID": "1234567890abcdefghijklmnopqrstuv"

}

},

{

"ETag": "\"174b29d6d688c2b34f6c1bb7361a8b7e\"",

"Size": 10,

"StorageClass": "STANDARD",

"Key": "file2.txt",

"VersionId": "HVRcuAOQ.gpqiU50mJkdAj4bAvgfCFN",

"IsLatest": true,

"LastModified": "2024-09-16T11:28:20.830Z",

"Owner": {

"DisplayName": "this-project",

"ID": "1234567890abcdefghijklmnopqrstuv"

}

}

],

"RequestCharged": null

}

To suspend versioning on this bucket, execute put-bucket-versioning:

aws s3api put-bucket-versioning \

--endpoint-url https://s3.waw3-1.cloudferro.com \

--bucket $bucket_name5 \

--versioning-configuration MFADelete=Disabled,Status=Suspended

The output of this command should be empty.
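Since the command prints nothing on success, it can be useful to confirm the new state explicitly. A minimal sketch, assuming that the endpoint supports the standard get-bucket-versioning subcommand of aws s3api and reports a Status field as AWS S3 does. The function name versioning_status is hypothetical.

```shell
# Hypothetical helper: print the versioning status of a bucket.
# $1 = endpoint URL, $2 = bucket name.
versioning_status() {
  aws s3api get-bucket-versioning \
    --endpoint-url "$1" \
    --bucket "$2" \
    --query Status \
    --output text
}

# Example call (not executed here):
# versioning_status https://s3.waw3-1.cloudferro.com "$bucket_name5"
```

Under the assumptions above, the call should print Suspended after the command shown earlier, and Enabled before it.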

We list versions of files again with list-object-versions:

aws s3api list-object-versions \

--endpoint-url https://s3.waw3-1.cloudferro.com \

--bucket $bucket_name5

The output shows us that the previous versions of files have not been removed:

{

"Versions": [

{

"ETag": "\"1f5f1ebe10ac3457ca87427e1772d71f\"",

"Size": 10,

"StorageClass": "STANDARD",

"Key": "file1.txt",

"VersionId": "CTv9FT1Wp9pxDZdlZXx2cJ5C2juPNA6",

"IsLatest": false,

"LastModified": "2024-09-16T11:28:47.501Z",

"Owner": {

"DisplayName": "this-project",

"ID": "1234567890abcdefghijklmnopqrstuv"

}

},

{

"ETag": "\"174b29d6d688c2b34f6c1bb7361a8b7e\"",

"Size": 10,

"StorageClass": "STANDARD",

"Key": "file1.txt",

"VersionId": "eaJNZLZTqtPAq9l09Nrm-CN-UAVtFHQ",

"IsLatest": false,

"LastModified": "2024-09-16T11:28:10.006Z",

"Owner": {

"DisplayName": "this-project",

"ID": "1234567890abcdefghijklmnopqrstuv"

}

},

{

"ETag": "\"174b29d6d688c2b34f6c1bb7361a8b7e\"",

"Size": 10,

"StorageClass": "STANDARD",

"Key": "file2.txt",

"VersionId": "HVRcuAOQ.gpqiU50mJkdAj4bAvgfCFN",

"IsLatest": true,

"LastModified": "2024-09-16T11:28:20.830Z",

"Owner": {

"DisplayName": "this-project",

"ID": "1234567890abcdefghijklmnopqrstuv"

}

}

],

"RequestCharged": null

}

We then

modify the contents of previously uploaded file file1.txt on our local computer and

upload that file again, with put-object:

aws s3api put-object \

--endpoint-url https://s3.waw3-1.cloudferro.com \

--bucket $bucket_name5 \

--body file1.txt \

--key file1.txt

After a successful upload, we again list all versions of files in our bucket (command list-object-versions):

{

"Versions": [

{

"ETag": "\"4d3828bb564834c45a522e3492cbdf4a\"",

"Size": 10,

"StorageClass": "STANDARD",

"Key": "file1.txt",

"VersionId": "null",

"IsLatest": true,

"LastModified": "2024-09-16T11:31:01.968Z",

"Owner": {

"DisplayName": "this-project",

"ID": "1234567890abcdefghijklmnopqrstuv"

}

},

{

"ETag": "\"1f5f1ebe10ac3457ca87427e1772d71f\"",

"Size": 10,

"StorageClass": "STANDARD",

"Key": "file1.txt",

"VersionId": "CTv9FT1Wp9pxDZdlZXx2cJ5C2juPNA6",

"IsLatest": false,

"LastModified": "2024-09-16T11:28:47.501Z",

"Owner": {

"DisplayName": "this-project",

"ID": "1234567890abcdefghijklmnopqrstuv"

}

},

{

"ETag": "\"174b29d6d688c2b34f6c1bb7361a8b7e\"",

"Size": 10,

"StorageClass": "STANDARD",

"Key": "file1.txt",

"VersionId": "eaJNZLZTqtPAq9l09Nrm-CN-UAVtFHQ",

"IsLatest": false,

"LastModified": "2024-09-16T11:28:10.006Z",

"Owner": {

"DisplayName": "this-project",

"ID": "1234567890abcdefghijklmnopqrstuv"

}

},

{

"ETag": "\"174b29d6d688c2b34f6c1bb7361a8b7e\"",

"Size": 10,

"StorageClass": "STANDARD",

"Key": "file2.txt",

"VersionId": "HVRcuAOQ.gpqiU50mJkdAj4bAvgfCFN",

"IsLatest": true,

"LastModified": "2024-09-16T11:28:20.830Z",

"Owner": {

"DisplayName": "this-project",

"ID": "1234567890abcdefghijklmnopqrstuv"

}

}

],

"RequestCharged": null

}

The previous versions of this file were not replaced. Instead, a new version of file1.txt, with a VersionId of null, was added.

From now on, each uploaded file will get a VersionId of null. If a version with a VersionId of null already exists under the same key, it will be replaced.

To illustrate that, we

once again modify the file file1.txt on our local computer and

upload this modified version again, using put-object:

aws s3api put-object \

--endpoint-url https://s3.waw3-1.cloudferro.com \

--bucket $bucket_name5 \

--body file1.txt \

--key file1.txt

After this upload, we list versions one more time and get the following output:

{

"Versions": [

{

"ETag": "\"c96e9d7d1e4655b15493cc31ab7cfc24\"",

"Size": 10,

"StorageClass": "STANDARD",

"Key": "file1.txt",

"VersionId": "null",

"IsLatest": true,

"LastModified": "2024-09-16T11:34:25.528Z",

"Owner": {

"DisplayName": "this-project",

"ID": "1234567890abcdefghijklmnopqrstuv"

}

},

{

"ETag": "\"1f5f1ebe10ac3457ca87427e1772d71f\"",

"Size": 10,

"StorageClass": "STANDARD",

"Key": "file1.txt",

"VersionId": "CTv9FT1Wp9pxDZdlZXx2cJ5C2juPNA6",

"IsLatest": false,

"LastModified": "2024-09-16T11:28:47.501Z",

"Owner": {

"DisplayName": "this-project",

"ID": "1234567890abcdefghijklmnopqrstuv"

}

},

{

"ETag": "\"174b29d6d688c2b34f6c1bb7361a8b7e\"",

"Size": 10,

"StorageClass": "STANDARD",

"Key": "file1.txt",

"VersionId": "eaJNZLZTqtPAq9l09Nrm-CN-UAVtFHQ",

"IsLatest": false,

"LastModified": "2024-09-16T11:28:10.006Z",

"Owner": {

"DisplayName": "this-project",

"ID": "1234567890abcdefghijklmnopqrstuv"

}

},

{

"ETag": "\"174b29d6d688c2b34f6c1bb7361a8b7e\"",

"Size": 10,

"StorageClass": "STANDARD",

"Key": "file2.txt",

"VersionId": "HVRcuAOQ.gpqiU50mJkdAj4bAvgfCFN",

"IsLatest": true,

"LastModified": "2024-09-16T11:28:20.830Z",

"Owner": {

"DisplayName": "this-project",

"ID": "1234567890abcdefghijklmnopqrstuv"

}

}

],

"RequestCharged": null

}

Once again, there is only one version which has null as its ID: the upload overwrote the previous version. The ETag and the date of last modification (LastModified) have changed. The previous value of LastModified was 2024-09-16T11:31:01.968Z and now it is 2024-09-16T11:34:25.528Z.
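A quick way to confirm this without reading the whole listing is to count the versions whose VersionId is null. The sketch below assumes the default JSON output format of AWS CLI, in which each field is printed on its own line as in the listings above. The function name count_null_versions is hypothetical.

```shell
# Hypothetical helper: count versions whose VersionId is null in a listing
# read from standard input (the JSON printed by list-object-versions).
# grep -c prints 0 but exits with status 1 when nothing matches, so the
# exit status is normalised with "|| true".
count_null_versions() {
  grep -c '"VersionId": "null"' || true
}

# Example call (not executed here):
# aws s3api list-object-versions \
#   --endpoint-url https://s3.waw3-1.cloudferro.com \
#   --bucket "$bucket_name5" | count_null_versions
```

For the listing above, the expected count is 1, because only file1.txt has a version with a VersionId of null.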

What To Do Next

AWS CLI is not the only available way of interacting with object storage. Other ways include:

- Horizon dashboard

- s3fs

How to Mount Object Storage Container as a File System in Linux Using s3fs on Eumetsat Elasticity

- Rclone

How to mount object storage container from Eumetsat Elasticity as file system on local Windows computer

- s3cmd

How to access object storage from Eumetsat Elasticity using s3cmd